Chapter 1: Basics of Technical SEO

In this section, I will talk about the fundamentals of SEO, particularly why technical SEO remains crucial even in the year 2023. I’ll give you a clear understanding of what falls under the category of technical SEO and what doesn’t.

So, let’s get started…

What is Technical SEO?

In simple terms, Technical SEO is all about making your website user-friendly for search engines. Search engines want to be able to find, analyze, and display your website properly so that your organic rankings can increase.

Some key components of technical SEO include making sure the website is easily crawlable, indexed, optimally rendered, and has a well-structured architecture.

Why Is Technical SEO Important?

Even if you have the best website with top-notch content, if your technical SEO is not up to par, your website won’t rank well in search results. Search engines like Google need to easily find, explore, display, and index all the pages on your website.

However, that’s just the tip of the iceberg. To fully optimize your website for technical SEO, your pages need to be secure, optimized for mobile devices, free from duplicated content, and quick to load. Many other factors go into technical optimization as well.

It’s important to note that your technical SEO doesn’t have to be perfect to rank, but the better you make it for search engines to access your content, the better your chances of ranking higher.

Ways to Improve Technical SEO

As I mentioned before, technical SEO is about more than just crawling and indexing. To enhance the technical optimization of your website, there are many things to keep in mind, such as:

- JavaScript

- XML sitemaps

- Website architecture

- URL structure

- Structured data

- Pages with minimal content

- Duplicate content

- Hreflang tags

- Canonical tags

- 404 error pages

- 301 redirects

And I might have missed a few others. But don’t worry! In the rest of this guide, I’ll cover all these topics and more so that you have a comprehensive understanding of technical SEO.

Chapter 2: Navigation and Site Structure

From my perspective, the structure of your website should be the first priority in any technical SEO effort. It's even more important than crawling and indexing.

Why is this so? Well, crawling and indexing issues arise due to a poorly designed website structure. So, if you get this right, you won’t have to worry as much about Google indexing all of your pages.

Moreover, the structure of your site affects all other optimization tasks, including URL structure, sitemap, and using the robots.txt file to block search engines from certain pages.

Simply put, a solid structure makes everything else in technical SEO easier. So, let’s move on and explore the steps involved.

Use Organized and Flat Site Structure

The way you organize your website’s pages is called your site structure. It’s important to have a flat structure, meaning your pages should be only a few links away from one another. A flat structure helps search engines like Google crawl all of your pages easily.

Additionally, having an organized structure helps avoid having “orphan pages” (pages with no links pointing to them) and makes it easier to identify and fix indexing issues. You can use the Semrush Site Audit tool to get a detailed view of your site structure or check out Visual Site Mapper for a more visual representation.
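If you already have a list of your internal links (for example, exported from a crawler), a rough check of how flat your structure is can be sketched in a few lines of Python. This is only an illustration: the `site` link map and its URLs are made up, and "flat" here simply means a low click depth from the homepage.

```python
from collections import deque

def click_depths(links, home):
    """Breadth-first search from the homepage; returns {page: clicks from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def orphan_pages(links, home):
    """Pages in the map that no other page links to (homepage excluded)."""
    linked_to = {t for targets in links.values() for t in targets}
    return sorted(p for p in links if p != home and p not in linked_to)

# Hypothetical internal-link map: page -> pages it links to.
site = {
    "/": ["/blog/", "/services/"],
    "/blog/": ["/blog/technical-seo/"],
    "/services/": [],
    "/blog/technical-seo/": [],
    "/old-landing-page/": ["/"],
}

depths = click_depths(site, "/")
print(depths)                   # click depth of every reachable page
print(orphan_pages(site, "/"))  # pages nothing links to
```

Pages with a high click depth are candidates for extra internal links, and anything reported as an orphan needs at least one link pointing to it.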

Consistent URL Structure

When it comes to your website’s URL structure, there’s no need to get too complicated, especially if you have a small site like a blog. However, it’s important to have a consistent and logical URL structure, as this helps users understand where they are on your site. Additionally, having different categories for your pages gives Google more context about each page.

Using breadcrumb navigation is also a great idea, as it automatically adds internal links to categories and sub-pages on your site, solidifying your site architecture.

Moreover, Google now displays breadcrumb-style URLs in search results, so when appropriate, I suggest using breadcrumb navigation.
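To show how a consistent URL structure and breadcrumbs fit together, here is a minimal Python sketch that derives a breadcrumb trail from a URL path. It assumes every folder in the URL maps to a real category page, which may not be true on your site, and `example.com` and the category names are placeholders.

```python
from urllib.parse import urlsplit

def breadcrumb_trail(url):
    """Return (label, url) pairs from the homepage down to the given page."""
    parts = urlsplit(url)
    root = f"{parts.scheme}://{parts.netloc}/"
    trail = [("Home", root)]
    current = root
    for segment in (s for s in parts.path.split("/") if s):
        current += segment + "/"
        # Turn a slug such as "site-structure" into a readable label.
        label = segment.replace("-", " ").title()
        trail.append((label, current))
    return trail

for label, link in breadcrumb_trail("https://example.com/blog/technical-seo/site-structure/"):
    print(f"{label} -> {link}")
```

Each level of the trail becomes an internal link, which is why breadcrumbs only work well when the URL folders are logical to begin with.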

Chapter 3: Indexing, Crawling, and Rendering

In this chapter, we’re focusing on making your website easily discoverable and accessible for search engines. I’ll guide you through identifying and resolving any crawl errors and ensure that search engine spiders can reach all the pages on your site quickly.

Spot Indexing Issues

If you want search engines to be able to crawl your website easily, you need to identify any pages that might hinder their ability.

Here are three ways to spot these problem areas:

Coverage Report

One great place to start is by checking the “Coverage Report” in Google Search Console. In this report, you will be able to see whether Google is unable to fully index or display the pages that you wish to appear in their search results.

Screaming Frog

Screaming Frog is known worldwide as the top crawler for a reason: it simply works incredibly well. After addressing any problems in the Coverage Report, I suggest performing a complete crawl with Screaming Frog for the best results.

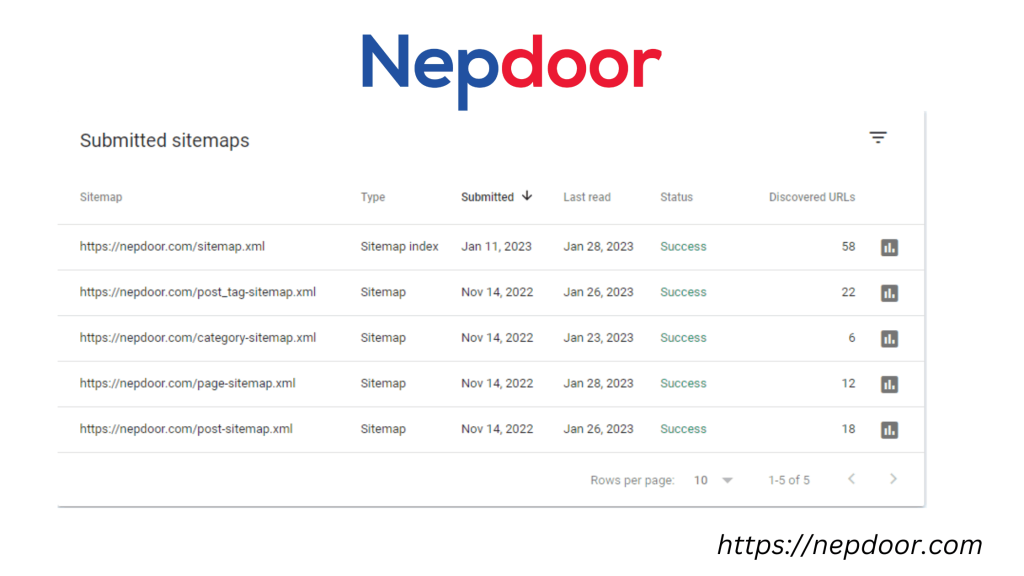

Use an XML Sitemap

Even in this era of mobile-first indexing and Accelerated Mobile Pages (AMP), is it still necessary for Google to have an XML sitemap to find your website’s URLs?

Yes. In fact, a representative from Google stated that XML sitemaps are the second most important source for locating URLs. The representative didn’t specify the first source, but it is likely external and internal links.

To make sure your sitemap is in good shape, you can check it using the “Sitemaps” feature in Google Search Console. It will display the sitemap that Google is seeing for your website.

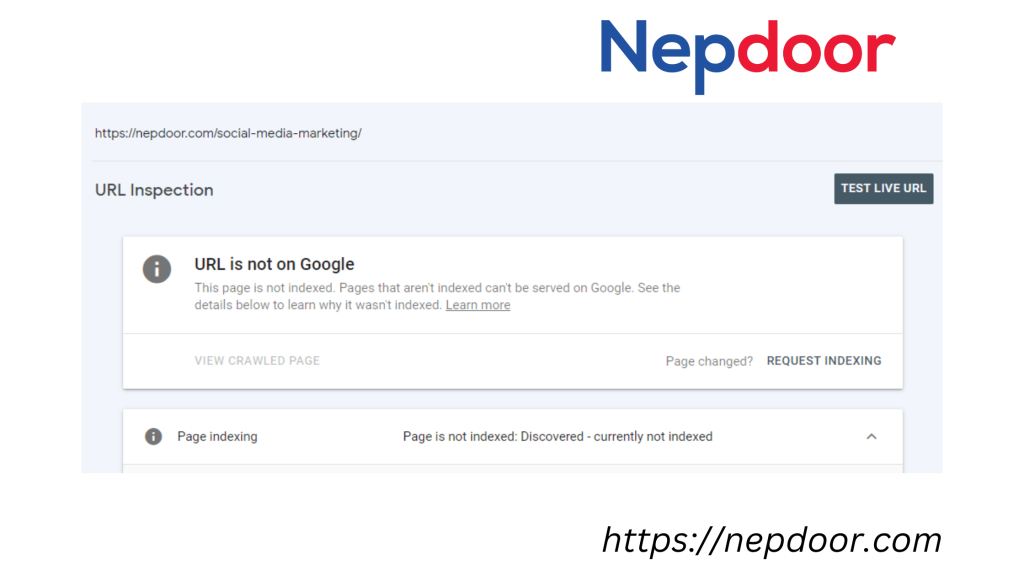
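If your CMS doesn't generate a sitemap for you, the format itself is simple. The following Python sketch builds a minimal sitemap with the standard library; it only includes the required `<loc>` element, and the `example.com` URLs are placeholders.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return a sitemap XML string with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")

sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/blog/technical-seo/",
])
print(sitemap_xml)
```

Most sites will never need to do this by hand, but seeing the raw structure makes it easier to read what Google Search Console reports.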

Inspection

If you’re having trouble with a URL on your site not appearing in search results, the Google Search Console’s Inspect feature can help you figure out what’s going on.

With this tool, you can not only find out why a page isn’t being indexed but also see how Google is rendering the pages that are indexed. That way, you can confirm that Google is able to crawl and index the entire page.

Chapter 4: Thin and Duplicate Content

You shouldn’t worry about duplicate content on your website if you create unique and original content for each page. However, it’s possible for duplicate content to accidentally show up on any website, especially if your content management system creates multiple versions of the same page on different URLs.

As for thin content, it’s not a concern for most websites, but it can impact your overall rankings. Thus, finding and fixing these problems is a good idea. In this section, I’ll show you how to address duplicate and thin content on your website.

Use an SEO Audit Tool to Find Duplicate Content

There are two tools that work well for finding duplicate and thin content. The first one is Raven Tools Site Auditor. It will scan your website and let you know which pages need to be updated if it finds duplicate or thin content. The Semrush site audit tool also has a “Content Quality” section that can help you identify duplicated content on your website.

It’s important to note that these tools only focus on finding duplicate content within your own website. If you find a snippet of your text on another site, search for that text in quotes. If Google shows your page first in the results, you are good to go.

Remember, if someone else copies your content and puts it on their website, that’s their problem, not yours. You only need to worry about duplicate content that appears on your site.

Noindex Pages That Don’t Have Unique Content

Many websites have pages with similar content, but when search engines like Google index those pages, problems can arise. The solution is to add the “noindex” tag to those pages, which tells search engines not to index them. You can use the “Inspect URL” feature in Google Search Console to make sure the noindex tag is working properly.

It may take a few days to a few weeks for Google to re-crawl the pages and remove them from the index. To check, you can go to the “Excluded” tab in the Coverage report in Google Search Console. For example, at Backlinko, we add a noindex tag to pages with paginated comments to avoid duplicate content issues. The robots.txt file can also be used to prevent search engine spiders from crawling a page.
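Before waiting for Google to re-crawl, you can check a page's HTML yourself. Here is a small Python sketch that looks for a robots meta tag containing `noindex`. It only inspects the HTML you pass in, so it won't catch a noindex sent through an HTTP header, and the sample markup is invented for the example.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )

def is_noindexed(html):
    """True if the page carries a robots meta tag with a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives

# Hypothetical page source.
page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(is_noindexed(page))
```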

Use Canonical URLs

Instead of adding the “noindex” tag to pages with duplicate content or replacing the content with something unique, there is another solution: canonical URLs. Canonical URLs are ideal for pages with similar content but minor differences.

For instance, let’s say you own an e-commerce website that sells IT-related products and has a product page specifically for technology. Depending on how your site is structured, each variation of size, color, and style could result in a different URL. It’s not ideal, but there is a way around it. You can use the canonical tag to inform Google that the original version of the product page is the primary one and all other pages are just variations.

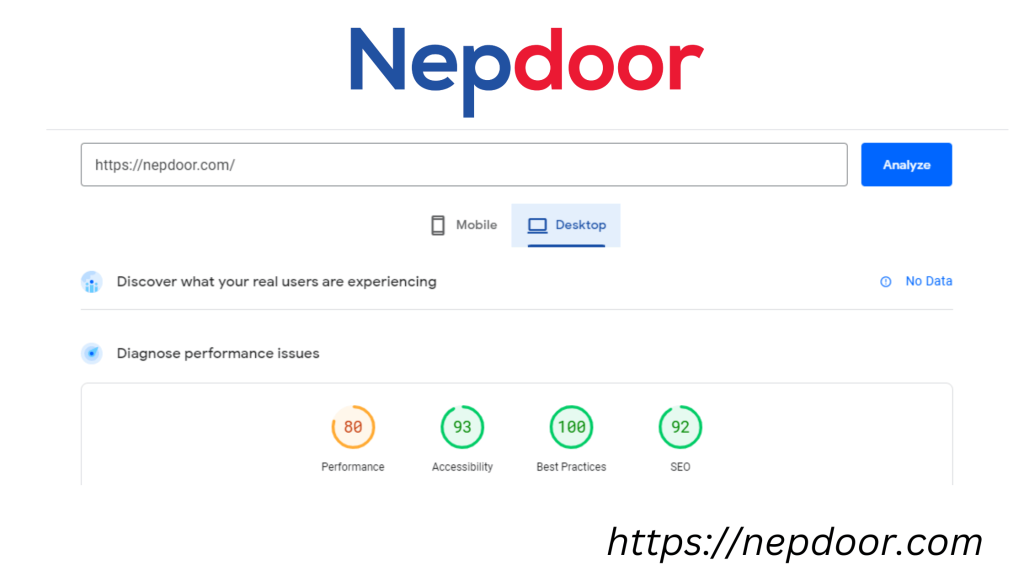
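As a rough illustration of the product-variation case, the Python sketch below turns a variant URL into a canonical tag. It assumes the variations differ only by query string (such as `?color=black`), which is just one common setup, and the product URL is a placeholder.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit

def canonical_tag(variant_url):
    """Build a canonical tag that points at the parameter-free version of a URL."""
    parts = urlsplit(variant_url)
    # Assumption: dropping the query string and fragment gives the primary page.
    canonical = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    return f'<link rel="canonical" href="{escape(canonical, quote=True)}" />'

print(canonical_tag("https://example.com/products/laptop-stand/?color=black&size=large"))
```

The resulting tag goes in the `<head>` of every variation, so they all point to the same primary page.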

Chapter 5: Page Speed

You can directly influence your website’s search engine rankings by improving its page speed. Even though fast-loading websites are not guaranteed to rank on Google’s first page (backlinks are still required), organic traffic can be greatly improved.

In this section, I’ll walk you through two simple ways to improve your site’s loading speed.

Reduce Web Page Size

You may have heard of CDN, cache, lazy loading, and minifying CSS as page speed optimization techniques.

There is, however, another equally important aspect that is often overlooked: the size of the web page. Our research found that the total size of a page has a stronger correlation with load times than any other factor.

On my own site, I’ve decided to prioritize a visually stunning page over a fast-loading one, even though the larger page size negatively impacts our scores on Google PageSpeed Insights.

Still, if you want your site to run faster, it’s important to reduce your page’s total size.
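To see where a page's weight comes from, it helps to total the bytes per resource type. The Python sketch below does that for a list of resources; the sizes are invented for the example, and in practice you would export them from your browser's network panel or a speed testing tool.

```python
def page_weight_report(resources):
    """Total bytes per resource type, largest first, plus the grand total."""
    by_type = {}
    for kind, size in resources:
        by_type[kind] = by_type.get(kind, 0) + size
    ranked = sorted(by_type.items(), key=lambda item: item[1], reverse=True)
    return ranked, sum(by_type.values())

# Hypothetical resources for one page: (type, size in bytes).
resources = [
    ("image", 1_200_000),
    ("script", 450_000),
    ("image", 800_000),
    ("css", 90_000),
    ("html", 60_000),
]

ranked, total = page_weight_report(resources)
for kind, size in ranked:
    print(f"{kind:<8}{size / 1024:>8.0f} KB  {size / total:.0%}")
```

Whatever lands at the top of the list is usually the first place to look for savings.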

Eliminate 3rd Party Scripts

When a website loads additional scripts from third-party sources, they can add an average of 34 milliseconds to the page’s load time. While some third-party scripts, such as Google Analytics, are essential, it’s a good idea to regularly review whether any unnecessary scripts can be removed to improve the website’s performance.
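A quick way to start that review is to list every script a page loads from another host. This Python sketch does so with the standard library. Note the assumption that anything on a different hostname counts as third-party, so your own CDN or subdomains would be flagged too, and the hosts in the sample HTML are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

class ScriptCollector(HTMLParser):
    """Collects the src attribute of every external <script> tag."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:  # inline scripts have no src and are skipped
                self.sources.append(src)

def third_party_scripts(html, page_url):
    """Return absolute script URLs served from a host other than the page's."""
    collector = ScriptCollector()
    collector.feed(html)
    page_host = urlsplit(page_url).hostname
    external = []
    for src in collector.sources:
        absolute = urljoin(page_url, src)
        if urlsplit(absolute).hostname != page_host:
            external.append(absolute)
    return external

sample_html = """
<script src="/js/app.js"></script>
<script src="https://analytics.example.org/tracker.js"></script>
<script src="//cdn.example.net/widget.js"></script>
<script>console.log("inline");</script>
"""

found = third_party_scripts(sample_html, "https://example.com/blog/")
print(found)
```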

Chapter 6: Extra Tips on Technical SEO

Let’s dive into some practical technical SEO tips!

We’ll cover topics such as redirects, structured data, hreflang, and other technical SEO elements.

Check Your Site for Broken Links

Although having a few dead links on your website won’t have a significant impact on your SEO, broken internal links can be problematic. Googlebot needs to be able to find and crawl your site’s pages, and broken internal links can make that more difficult.

That’s why it’s a good idea to regularly perform an SEO audit and fix any broken links you find. You can use SEO audit tools like Semrush or Screaming Frog to locate broken links on your site. By fixing broken internal links, you ensure that Googlebot can crawl your site’s pages efficiently.
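If you export crawl data from one of those tools, finding the broken internal links comes down to a simple lookup. The Python sketch below assumes a made-up data shape (each page with its status code and outgoing internal links), so you would need to adapt it to whatever your crawler exports.

```python
def broken_internal_links(pages):
    """pages: {url: {"status": int, "links": [urls]}} -> [(source, target, status)].

    Targets missing from the crawl are reported with status None so they
    can be checked by hand.
    """
    broken = []
    for source, info in pages.items():
        for target in info["links"]:
            target_info = pages.get(target)
            status = target_info["status"] if target_info else None
            if status is None or status >= 400:
                broken.append((source, target, status))
    return broken

# Hypothetical crawl export.
crawl = {
    "/": {"status": 200, "links": ["/blog/", "/pricing/"]},
    "/blog/": {"status": 200, "links": ["/", "/blog/old-post/"]},
    "/pricing/": {"status": 200, "links": []},
    "/blog/old-post/": {"status": 404, "links": []},
}

for source, target, status in broken_internal_links(crawl):
    print(f"{source} links to {target} (status {status})")
```

Listing the source page alongside the broken target matters, because the fix happens on the page that contains the link.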

Implement hreflang for International Websites

If your website has different versions of a page for different countries and languages, the hreflang tag can improve your website’s performance. However, setting up the hreflang tag can be challenging, and Google’s documentation on how to use it is not always straightforward. There is a tool that makes it relatively easy to create hreflang tags for multiple countries, languages, and regions.
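To give a sense of what such a tool produces, here is a minimal Python sketch that builds the hreflang tags for one page. The locales and `example.com` URLs are placeholders, and the `x-default` fallback is an optional extra I've included for illustration.

```python
from html import escape

def hreflang_tags(versions, default_locale):
    """Build one <link rel="alternate"> tag per locale, plus an x-default fallback."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(locale)}" href="{escape(url)}" />'
        for locale, url in versions.items()
    ]
    # x-default tells search engines which version to use when no locale matches.
    fallback = escape(versions[default_locale])
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{fallback}" />')
    return tags

# Hypothetical language versions of the same page.
versions = {
    "en-us": "https://example.com/",
    "en-gb": "https://example.com/uk/",
    "de-de": "https://example.com/de/",
}

tags = hreflang_tags(versions, "en-us")
print("\n".join(tags))
```

The same full set of tags, including the page's own entry, goes in the `<head>` of every language version so the references point both ways.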

Set Up Structured Data

Schema markup may not directly improve your website’s SEO, but the Rich Snippets it enables can make your pages more noticeable in the search engine results pages, potentially resulting in more organic click-throughs.
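Structured data is usually added as a JSON-LD script in the page's HTML. As a rough sketch, the Python below generates one for an article using schema.org's `Article` type. The headline, author, date, and URL are invented, and which properties a given rich result actually needs depends on the type, so treat the field list as an assumption.

```python
import json

def article_json_ld(headline, author, date_published, url):
    """Return a JSON-LD <script> block describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'

# Placeholder values for illustration only.
snippet = article_json_ld(
    headline="Basics of Technical SEO",
    author="Jane Doe",
    date_published="2023-01-15",
    url="https://example.com/blog/technical-seo/",
)
print(snippet)
```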

Validate Your XML Sitemap

Managing a large website can be overwhelming, and it can be easy to miss broken or redirected links in your sitemap. The purpose of a sitemap is to show search engines all of your live pages, so it’s important to make sure every link in your sitemap is pointing to a working page.
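One way to sketch that check in Python is to parse the sitemap and flag every URL that doesn't answer with a 200 status. To keep the example self-contained, the status lookup is passed in as a function and faked with a dictionary here; in real use you would replace it with an actual HTTP request. The sample sitemap and its URLs are made up.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_problems(sitemap_xml, get_status):
    """Return (url, status) for every sitemap entry that does not answer 200."""
    root = ET.fromstring(sitemap_xml)
    problems = []
    for loc in root.findall("sm:url/sm:loc", NS):
        url = (loc.text or "").strip()
        status = get_status(url)
        if status != 200:
            problems.append((url, status))
    return problems

SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old-page/</loc></url>
  <url><loc>https://example.com/gone/</loc></url>
</urlset>"""

# Stand-in for real HTTP checks: URL -> status code.
FAKE_STATUSES = {
    "https://example.com/": 200,
    "https://example.com/old-page/": 301,
    "https://example.com/gone/": 404,
}

problems = sitemap_problems(SAMPLE, FAKE_STATUSES.get)
print(problems)
```

Redirected entries should be swapped for their final URL, and dead ones removed from the sitemap altogether.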

Noindex Tag and Category Pages

If you have a WordPress website, it’s a good idea to consider noindexing your category and tag pages, unless, of course, these pages generate a lot of traffic. These pages usually don’t provide much value to users and can lead to duplicate content issues.

With Yoast, noindexing these pages is a quick and simple process that takes just one click.

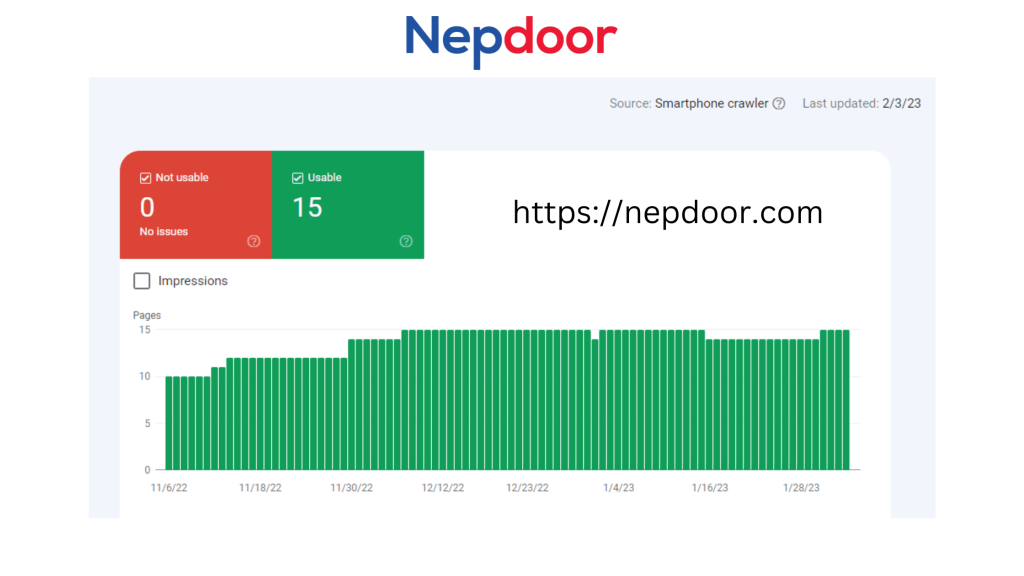

Check for Mobile Usability

It’s 2023, so it’s safe to say that having a mobile-optimized website is a must. However, even sites that seem to be perfectly optimized for mobile can still have issues that go unnoticed. If you’re not receiving complaints from users, it can be difficult to identify these issues. This is where the Google Search Console’s Mobile Usability report comes in handy.

Google will let you know if any of your pages are not optimized for mobile users and provide specific details about what needs to be fixed. In this way, you can ensure that all of your pages provide a great user experience for mobile visitors.

Conclusion

Thank you for taking the time to read my guide on technical SEO. Now, I would love to hear your thoughts and opinions. Which tip from this guide sparked your interest the most?

Are you planning to prioritize speeding up your website or do you want to focus on finding and fixing dead links? Or maybe there is another tip that caught your attention?

Please feel free to share your feedback by leaving a comment below. I would be happy to hear from you.
